Text to Image Synthesis

Implemented StackGAN and analyzed the importance of its stacked architecture.

Synthesizing photo-realistic images from text descriptions is a challenging problem in computer vision with many practical applications. Images generated by earlier approaches rarely reflected the meaning of their text descriptions and often lacked important details mentioned in the text. The paper I implemented, StackGAN, breaks the generation process into two stages, each handled by a Generative Adversarial Network (GAN).

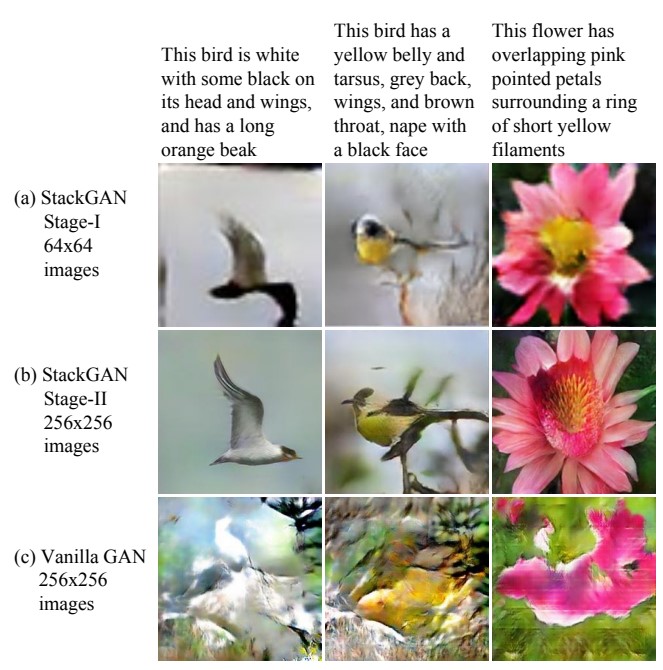

The Stage-I GAN sketches the primitive shape and colors of the object based on the given text description, yielding low-resolution Stage-I images. The Stage-II GAN takes the Stage-I results and the text description as inputs and generates high-resolution images with photo-realistic details, as shown in the image above.
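In the original StackGAN paper, Stage-I outputs 64×64 images and Stage-II outputs 256×256 images, and both generators reach their output size through repeated 2× up-sampling. The sketch below is a minimal illustration of that resolution growth only. It assumes nearest-neighbour up-sampling and a 4×4 starting grid (as in the paper's Stage-I generator), and it omits the convolutions, normalization and learned weights a real generator applies after each step. The helper names `upsample_nearest` and `upsample_to` are hypothetical.

```python
def upsample_nearest(grid):
    """Double the height and width of a 2D grid by repeating each cell 2x2."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))  # repeat the widened row vertically
    return out


def upsample_to(grid, target):
    """Up-sample a square grid until its side is at least `target`.

    Returns the final grid and the list of side lengths visited.
    """
    sizes = [len(grid)]
    while len(grid) < target:
        grid = upsample_nearest(grid)
        sizes.append(len(grid))
    return grid, sizes


if __name__ == "__main__":
    # Toy 4x4 "feature map"; a real generator would produce this from an FC layer.
    seed = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    image, sizes = upsample_to(seed, 64)
    print(sizes)  # [4, 8, 16, 32, 64]
    print(len(image), len(image[0]))  # 64 64
```

Four doublings take the 4×4 grid to the 64×64 Stage-I resolution. Nearest-neighbour repetition is only one option; transposed convolutions are a common alternative in GAN generators.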

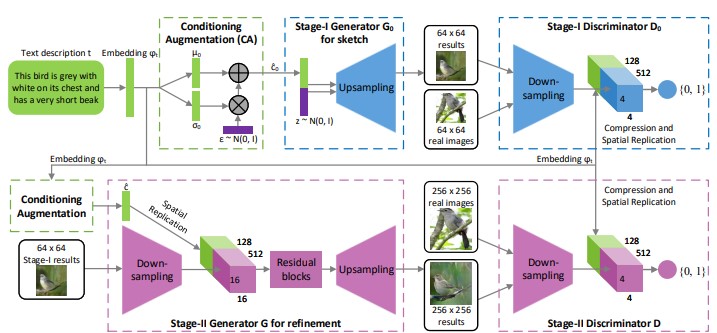

First, the input description is encoded as a text embedding, and a fully connected layer maps this embedding to the mean and variance of a Gaussian distribution. A conditioning vector sampled from that Gaussian (Conditioning Augmentation) is concatenated with a noise vector, and a series of up-sampling blocks in the Stage-I generator turns it into a rough low-resolution image. The Stage-I discriminator then scores this image, together with the text embedding, to decide whether it is real or generated. The low-resolution image from the Stage-I generator is then given to the Stage-II GAN along with the text embedding, and the Stage-II generator and discriminator produce and judge a detailed high-resolution image. The model architecture is shown in the figure above.
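The Conditioning Augmentation step can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation used here: it assumes the fully connected layer outputs a mean and a log-variance (a common parameterization, also used in the official StackGAN code), samples the conditioning vector with the reparameterization trick, and computes the KL divergence to a standard normal that StackGAN adds to the generator loss as a regularizer. The function names and the toy dimensions are hypothetical.

```python
import math
import random


def linear(x, weight, bias):
    """Fully connected layer y = W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def conditioning_augmentation(embedding, weight, bias, rng):
    """Map a text embedding to a diagonal Gaussian and sample from it.

    Assumption: the FC layer outputs 2*k values; the first k are the mean,
    the last k the log-variance. Sampling uses c = mu + sigma * eps.
    """
    out = linear(embedding, weight, bias)
    k = len(out) // 2
    mu, logvar = out[:k], out[k:]
    sigma = [math.exp(0.5 * lv) for lv in logvar]
    c = [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]
    return c, mu, logvar


def kl_to_standard_normal(mu, logvar):
    """KL(N(mu, diag(exp(logvar))) || N(0, I)), summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


if __name__ == "__main__":
    rng = random.Random(0)
    emb_dim, cond_dim, noise_dim = 8, 4, 6  # toy sizes, not the paper's
    embedding = [rng.uniform(-1.0, 1.0) for _ in range(emb_dim)]
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(emb_dim)] for _ in range(2 * cond_dim)]
    bias = [0.0] * (2 * cond_dim)
    c, mu, logvar = conditioning_augmentation(embedding, weight, bias, rng)
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    generator_input = c + z  # concatenated input to the Stage-I generator
    print(len(generator_input))  # 10
    print(kl_to_standard_normal(mu, logvar))
```

Sampling the conditioning vector, rather than feeding the embedding in directly, gives the generator slightly different conditions for the same description, and the KL term keeps that distribution close to a standard normal.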

In the original paper, StackGAN achieved higher Inception Scores on the Oxford-102 and COCO datasets than the state-of-the-art models of the time.
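The Inception Score is defined as IS = exp(E_x[KL(p(y|x) || p(y))]), where p(y|x) is a classifier's label distribution for a generated image x and p(y) is its average over all generated images. The sketch below computes this formula from class probabilities that are supplied directly; in practice they come from a pretrained Inception network applied to the generated images, and the score is often averaged over several splits of the samples, which this sketch omits. The function name and the example inputs are hypothetical.

```python
import math


def inception_score(probs, eps=1e-12):
    """Compute exp(mean_i KL(p(y|x_i) || p(y))) from per-image class probabilities.

    `probs` is a list of rows, one probability distribution per generated image.
    p(y) is estimated as the mean of the rows.
    """
    n = len(probs)
    num_classes = len(probs[0])
    marginal = [sum(row[j] for row in probs) / n for j in range(num_classes)]
    total_kl = 0.0
    for row in probs:
        # Terms with p == 0 contribute nothing to the KL divergence.
        total_kl += sum(
            p * (math.log(p + eps) - math.log(q + eps))
            for p, q in zip(row, marginal)
            if p > 0
        )
    return math.exp(total_kl / n)


if __name__ == "__main__":
    confident_and_diverse = [[1.0, 0.0], [0.0, 1.0]]
    identical = [[0.5, 0.5], [0.5, 0.5]]
    print(round(inception_score(confident_and_diverse), 3))  # 2.0
    print(inception_score(identical))  # 1.0
```

The score rewards images that the classifier labels confidently (low-entropy p(y|x)) and a set of images spread across many classes (high-entropy p(y)); its maximum is the number of classes.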