Human-Aware Recurrent Transformer

Evaluating the importance of the user-state in the HaRT model on document-level tasks.

Natural language is generated by people, yet traditional language modeling treats words or documents as if they were generated independently. To include a user’s context, the Human-Aware Recurrent Transformer (HaRT), a large-scale transformer model for the human language modeling task, was introduced. In HaRT, a human level connects sequences of documents (e.g., social media messages) and captures the notion that human language is moderated by changing human states. In this project, we evaluate the impact of the user-state on the accuracy of the HaRT model on document-level tasks. We conduct experiments on two downstream tasks: predicting users' stance on the topic of feminism, and detecting hate speech in users' social media data. Results on stance prediction indicate that predicting stance from the user-state alone decreases accuracy on document-level tasks. On hate speech detection, the results of the baseline HaRT model meet or surpass the current state of the art.

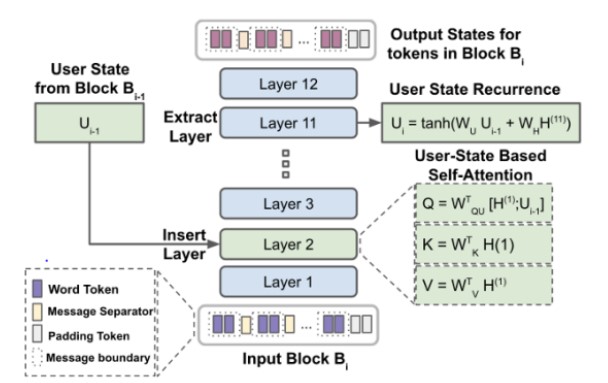

As shown in the figure above, the HaRT architecture combines one modified transformer layer, which uses a user-state based self-attention mechanism, with additional conventional token-level self-attention transformer layers from a pre-trained GPT-2. HaRT models the input data (language) in the context of its source (the user) as well as its inter-document context, providing a higher-order structure that represents human context.
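The numpy sketch below illustrates the idea of a user-state that is carried across a user's documents and injected into self-attention. It is simplified and hypothetical. Three design choices are assumptions for illustration and not necessarily HaRT's exact formulation:

- Queries are computed from the concatenation of token hidden states and the user-state.
- The user-state is updated with a tanh recurrence from the last token of each block.
- The recurrence reads the attention output instead of a deeper layer.

All weight names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

D = 8  # hidden size (GPT-2 base uses 768; kept small for illustration)
T = 5  # tokens per block of user text (hypothetical)

# Hypothetical, randomly initialised parameters; a real model learns these.
W_q = rng.normal(scale=0.1, size=(2 * D, D))  # query from [hidden ; user-state]
W_k = rng.normal(scale=0.1, size=(D, D))
W_v = rng.normal(scale=0.1, size=(D, D))
W_u = rng.normal(scale=0.1, size=(D, D))      # user-state -> user-state
W_h = rng.normal(scale=0.1, size=(D, D))      # block summary -> user-state


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def user_state_attention(H, u):
    """Causal self-attention whose queries also see the user-state u (assumed design)."""
    n = H.shape[0]
    Q = np.concatenate([H, np.tile(u, (n, 1))], axis=1) @ W_q
    K, V = H @ W_k, H @ W_v
    scores = Q @ K.T / np.sqrt(H.shape[1])
    future = np.triu(np.ones((n, n), dtype=bool), k=1)  # hide future tokens
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ V


def update_user_state(u_prev, H_block):
    """Assumed tanh recurrence driven by the last token's representation of a block."""
    return np.tanh(u_prev @ W_u + H_block[-1] @ W_h)


# Process one user's blocks of documents in temporal order.
u = np.zeros(D)
blocks = [rng.normal(size=(T, D)) for _ in range(3)]
for H in blocks:
    out = user_state_attention(H, u)
    u = update_user_state(u, out)

print(out.shape, u.shape)  # (5, 8) (8,)
```

The key point the sketch tries to capture is that the same token sequence can be encoded differently depending on the user-state accumulated from earlier documents.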

However, the HaRT model currently does not directly use the user-state when predicting a user's stance or sentiment. In document-level tasks, the user-state is used only to compute the self-attention block. We therefore modify the baseline HaRT model to answer the following questions:

- Can we predict the stance of a user using only their user-state representation?

- Can we group users based on their trained user-state vectors?

- Can we extend the HaRT model to detect hate speech?

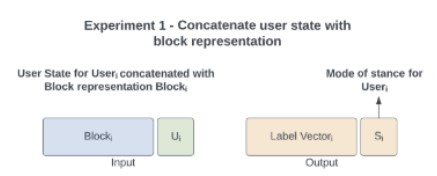

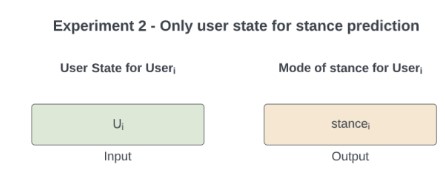

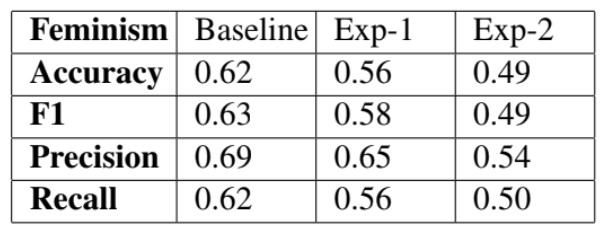

We experiment with two methods of using the user-state to determine which gives better results on document-level downstream tasks. The model that uses only the user-state vector for stance prediction performs worse than both the baseline model and the model that combines the user-state with the transformer output. A possible explanation is the absence of document-level information when only the user-state representation is used.

(i) Exp-1: Concatenating the user-state with the transformer output. (ii) Exp-2: Using only the user-state as the output representation.

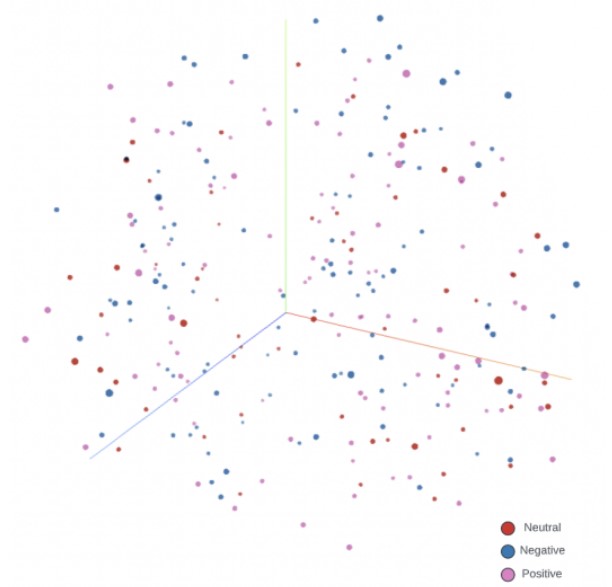
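The two variants compared above (Exp-1 and Exp-2) can be sketched as two linear classification heads that differ only in their input features. The following are all assumptions for illustration:

- the head names;
- a three-way stance label set (e.g., favor / against / none);
- using a per-document transformer vector as the document representation;
- the synthetic inputs.

The sketch assumes that two documents by the same author are classified against the same user-state.

```python
import numpy as np

rng = np.random.default_rng(0)

D = 8          # hidden / user-state size (illustrative)
N_CLASSES = 3  # assumed stance labels, e.g. favor / against / none


def linear_head(in_dim, n_classes):
    """Hypothetical randomly initialised linear classifier: (weights, bias)."""
    return rng.normal(scale=0.1, size=(in_dim, n_classes)), np.zeros(n_classes)


def predict(features, head):
    W, b = head
    return np.argmax(features @ W + b, axis=-1)


# Exp-1: transformer document representation concatenated with the user-state.
head_exp1 = linear_head(2 * D, N_CLASSES)
# Exp-2: the user-state alone.
head_exp2 = linear_head(D, N_CLASSES)

# Four documents: rows 0-1 written by one user, rows 2-3 by another (synthetic).
doc_repr = rng.normal(size=(4, D))                        # one vector per document
user_state = np.repeat(rng.normal(size=(2, D)), 2, axis=0)  # shared per user

pred_exp1 = predict(np.concatenate([doc_repr, user_state], axis=-1), head_exp1)
pred_exp2 = predict(user_state, head_exp2)
```

Under these assumptions, Exp-2 must assign the same label to every document that shares a user-state, whatever the document says. This is one concrete way to read the explanation above about missing document-level information.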

We also performed a principal component analysis (PCA) of 274 user-state representations and found that the user-states do not form clusters according to the users' stance on the topic, as shown below.

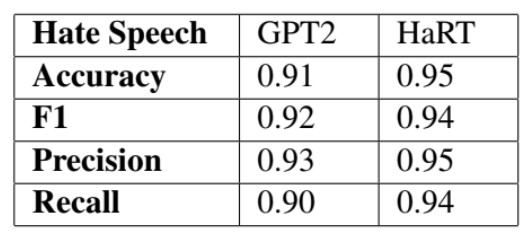
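A PCA projection like the one in the figure can be computed with a plain SVD, as in the minimal sketch below. The data here is synthetic. The 274 × 768 shape assumes 768-dimensional user-states (the GPT-2 base hidden size), and the stance labels are placeholders, not the project's data.

```python
import numpy as np


def pca(X, n_components=2):
    """Project rows of X onto their top principal components using SVD."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    explained = S**2 / np.sum(S**2)  # fraction of variance per component
    return X_centered @ Vt[:n_components].T, explained[:n_components]


rng = np.random.default_rng(0)
user_states = rng.normal(size=(274, 768))  # placeholder user-state vectors
stances = rng.integers(0, 3, size=274)     # placeholder stance labels

coords, ratio = pca(user_states, n_components=2)

# Per-stance centroids in PCA space; overlapping centroids and point clouds
# are consistent with "no clusters by stance".
centroids = np.array([coords[stances == k].mean(axis=0) for k in range(3)])
print(coords.shape)  # (274, 2)
```

Coloring `coords` by `stances` in a scatter plot gives the kind of visualization shown above. The explained-variance ratio indicates how much of the user-state variation the two plotted components actually capture.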

Finally, we compare the performance of the HaRT baseline against the GPT-2 model on the document-level task of hate speech detection. The baseline HaRT model with the user-state performs slightly better than the GPT-2 model. This reaffirms the indirect importance of the user-state vector: conditioning language modeling on a user-state helps.